In the rapidly evolving landscape of destination marketing, Artificial Intelligence (AI) has shifted from an emerging trend to a cornerstone of strategic decision-making and operational efficiency. This blog explores the profound implications of AI in enhancing visitor experiences and offers crucial insights from a recent Destinations International webinar.

AI is forcing organizations to rethink their operating and competitive models. On April 18, 2024, a group of industry leaders discussed its impact in a webinar hosted by Destinations International and its Business Operations task force. In this blog, I share the key takeaways and opinions from that discussion.

The Importance of AI for Destination Marketing

According to DestinationNEXT's future study research (a strategic roadmap and global survey that helps destinations optimize their relevance and value), AI rose from an emerging trend in 2021 to the dominant global industry trend by 2023. This shift underscores AI's role in enhancing operational efficiency and strategic decision-making. Organizations increasingly prioritize AI and recognize its potential to redefine their planning and engagement strategies.

Challenges & Regulatory Landscape

Digging deeper reveals two challenges. First, we have to consider the risks involved. Second, we have to figure out how to integrate this technology into our operating model.

AI risks can emerge from multiple sources: data, algorithms, architecture, or operations. Once risks have been identified, we need to construct well-grounded AI policies to mitigate them. These Responsible AI policies act as guideposts for the ethical, transparent, and effective use of AI technologies.

From an operating model perspective, the big question is how to integrate AI responsibly. Recent regulatory measures, including the Biden Administration's Executive Order on artificial intelligence and the European Union's Artificial Intelligence Act, both aim to establish ethical AI frameworks and ensure Responsible AI usage. Meta's recent initiative to label AI-generated content draws attention to the growing need for transparency in AI applications, a move that could reshape content creation and distribution.

Where to Start

The recent Destinations International webinar featured a diverse group of panelists: Kim Young from Experience Grand Rapids, Altaz Valani from Info-Tech Research Group, and Vimal Vyas, CDME, from Visit Raleigh. They shared personal experiences and strategies for AI integration, focusing on creating awareness, engaging stakeholders, and drafting Responsible AI policies.

Kim Young highlighted proactive steps Experience Grand Rapids has taken to incorporate AI into its operational framework. The DMO is creating a technology handbook that includes AI policies and is working to ensure all team members are aligned on the ethical use of AI tools.

Altaz Valani emphasized the importance of starting AI policy creation with well-informed use cases that align with business values and help manage risk. This proactive approach gives organizations a solid foundation for Responsible AI usage.

Vimal Vyas, CDME, discussed how Visit Raleigh uses AI to enhance visitor engagement through a branded Destination Generative AI Experience Agent that integrates with its existing CMS and CRM systems. The agent demonstrates how AI can provide tailored responses and improve user interactions while adhering to accessibility standards and the Responsible AI principles of Visit Raleigh's operational framework.

Community Shared Values

Community Shared Values are the foundation of Responsible AI. They are the common values that guide how a business operates. Some examples are:

- Passion & Innovation: Encouraging a strong drive and commitment to continuously improve and innovate within AI applications.

- Awareness & Transparency: Ensuring that AI processes are understandable and that decisions can be explained, promoting awareness among all community members.

- Inclusion & Fairness: Actively working to eliminate biases in AI to create systems that are inclusive and equitable for all groups.

- Engagement & Accountability: Engaging with the community to maintain clear accountability mechanisms for AI, ensuring feedback and modifications are possible.

- Collaboration & Safety/Security: Enhancing collaboration across stakeholders to prioritize the safety and security of AI systems.

- Stewardship & Privacy: Upholding high standards for data privacy and protecting individual information, in keeping with the responsibility to manage community resources prudently.

- Relevance: Ensuring AI applications remain relevant to community needs and values.

Aligning AI initiatives with these values not only supports ethical AI practices but also enhances the overall effectiveness and acceptance of AI within community-oriented initiatives.

AI Guiding Principles

AI Guiding Principles map to the shared values described earlier. They translate those values into a consistent approach that applies across all of an organization's AI initiatives.

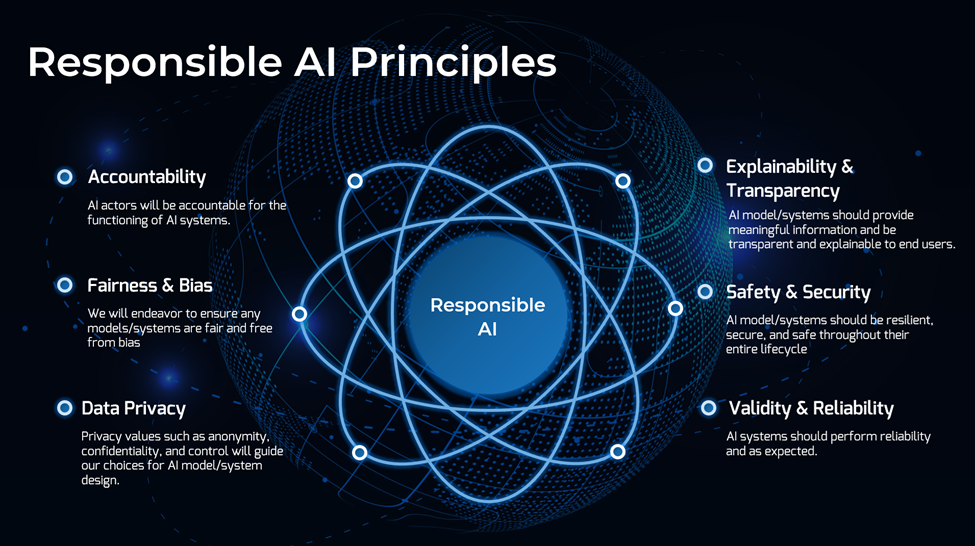

In the panel discussion, we also explored Responsible AI (RAI) guiding principles. These principles put the community shared values into practice in the AI domain by informing risk identification and policy creation.

Examples of Responsible AI Principles

- Accountability is about maintaining clear accountability mechanisms for AI systems, ensuring that there is always a way to audit and modify AI behavior.

- Fairness is about working to eliminate biases in AI algorithms and ensure that AI systems do not discriminate against any group.

- Privacy is about upholding strict standards to protect the privacy of individuals, ensuring that AI systems comply with all applicable privacy laws and regulations.

- Transparency is about ensuring that AI processes are understandable by stakeholders and that decisions made by AI systems can be explained.

- Safety & Security is about prioritizing the safety and security of AI systems, protecting them from malicious uses and ensuring they operate as intended without causing harm.

- Validity & Reliability is about ensuring that AI systems perform consistently and as expected.

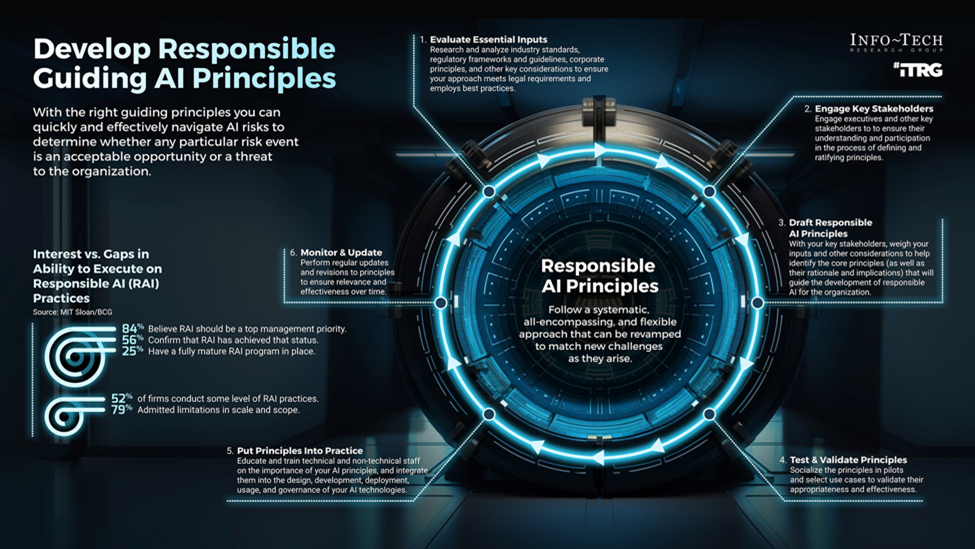

According to Info-Tech Research Group, developing Responsible AI principles should follow a structured process:

- Engage with key stakeholders to gather diverse perspectives;

- Align the AI principles with the organization’s core values and mission;

- Educate and train employees on these principles; and

- Regularly review and update the principles to ensure they remain relevant and effective in addressing new ethical challenges as AI technologies evolve.

For a detailed breakdown, visit the Info-Tech Research Group website.

Creating AI Policies

The webinar panelists shared their insights on drafting AI policies. Two points emerged:

- Engage multiple stakeholders. In one example, the leadership team engaged in thorough discussions to understand AI implications fully and then developed a policy to address confidentiality, transparency, and compliance.

- Draft AI policies as a group. Collaborative drafting combines industry best practices with the organization's unique needs. This approach reduces decision bias, helps ensure that AI tools can support the policies, and keeps the policies aligned with business goals while maintaining trust with internal teams, visitors, partners, and other stakeholders.

Conclusion

As AI continues to influence the destination marketing landscape, organizations need a strategy that focuses on responsible usage, stakeholder engagement, continuous learning, and trust. Insights from the webinar provide a roadmap for organizations to harness AI's potential and remain competitive and innovative in the digital age.
